20 AI Myths That Deserve a Reality Check

You’ve heard the hype. Maybe even believed a few things you read online. AI is everywhere. But what if some of the loudest claims about it just aren’t true? At Premium Websites, Inc., we want small business owners to feel informed, not overwhelmed. Let’s separate fiction from function.

Written by Dotty Scott

Founder of Premium Websites, Inc.

Empowering small businesses to go from Invisible to Invincible.

Privacy and Security

AI can feel like a black box. This group of myths clears up what really happens to your data and how to stay in control.

#1 It’s Private

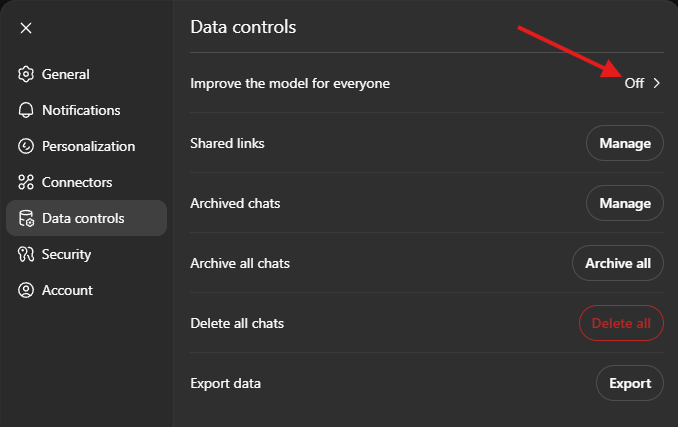

By default, ChatGPT may use your conversations to train and improve OpenAI’s future models. This means your conversation could be reviewed, even if anonymously, unless you turn that setting off. You can opt out in ChatGPT’s Data Controls settings, but most users don’t realize it’s on by default.

If you’re working with anything sensitive like financial data, internal strategies, or client information, treat the chat window like a whiteboard in a public hallway. Don’t assume privacy. Consider using secure platforms, or switch to a plan with enterprise-grade privacy controls.

AI chats are helpful for brainstorming and general support, but they’re not secure repositories. Always think twice before sharing confidential data.

#2 It’s Safe for Confidential Files

Think twice. Most mainstream AI platforms, including ChatGPT, retain uploaded documents for a period of time for quality control and abuse prevention. Opting out of data sharing or using an enterprise-level plan limits how your content is used, but it may still be retained for up to 30 days.

If privacy matters for contracts, medical records, client notes, or sensitive projects, assume it’s not private by default. Some platforms offer enhanced privacy settings, but you have to enable them manually. A secure business plan may allow for local data processing or private model use, but these typically come at a higher cost.

Until you’re sure of how your platform handles data, treat every upload as if others can see it. That doesn’t mean AI is unsafe, but it does mean you need to know the settings and the fine print.

#3 You Own the AI-Generated Images

When you generate images using AI tools like ChatGPT’s DALL·E or similar platforms, you’re generally granted usage rights. However, this doesn’t mean you’re free from liability.

If the image includes recognizable trademarks, brand elements, or the likeness of a real person, especially a celebrity, you could still face legal issues. These tools don’t automatically detect trademark or likeness conflicts, so it’s on you to do that homework.

Before using AI-generated visuals in marketing or client projects, run a reverse image search or consult with a licensing expert. Public domain and royalty-free image libraries remain safer options when unsure.

#4 It Owns Your Uploaded Images

You retain rights to anything you upload. But that doesn’t mean you’re in the clear when remixing content that wasn’t originally yours. Uploading or altering images that contain copyrighted material like stock photos, logos, or celebrity likenesses can still lead to legal trouble.

Even AI-generated derivatives can be risky if they closely resemble protected images. If you’re unsure, always verify licensing or use images from public domain or royalty-free libraries.

AI doesn’t grant legal immunity. You’re still responsible for how the content is used and where it came from.

Cost and Access

AI isn’t free magic. These myths cover what you really get at different price points and what’s under the hood.

#5 It’s 100% Free

Some AI tools offer free access, but it’s usually limited in speed, memory, or features. For example, ChatGPT’s free tier is suitable for basic writing and idea generation, but it caps how much you can use its more advanced models and features such as file uploads, image generation, and web browsing.

Upgrading to the $20/month ChatGPT Plus plan raises those limits and unlocks more capable models such as GPT-4. You get stronger writing, tools like DALL·E for images, expanded memory, and internet search.

There are also higher-tier plans, such as ChatGPT Team and Enterprise. These are geared toward organizations that require collaboration tools, enhanced privacy protections, and increased processing capacity. Each AI platform will have different pricing, so always check what’s included before subscribing.

#6 It Uses Google for Searches

Actually, ChatGPT’s browsing feature has relied largely on Microsoft’s Bing search engine, not Google. That means the search results and sources it pulls from can differ significantly from what you’d see on Google, which can affect how relevant the information is for your query.

Some AI tools use their own search engines or ones from other companies entirely. Knowing where your AI’s information comes from helps you decide when to verify facts or get a second opinion from a trusted search engine.

Limits of Understanding

AI is powerful, but it’s not human. These myths explain where the understanding ends and the guessing begins.

#7 AI Knows the Entire Internet

It doesn’t. Unless you enable its browsing feature, ChatGPT works from a fixed training dataset that includes public information such as books, Wikipedia, Reddit, and transcripts. That training data has a cutoff date (June 2024 for the model current at the time of writing), so it won’t know about your latest blog post unless you paste the content into the chat.

#8 AI Understands Context Like Humans Do

Not quite. AI models such as ChatGPT interpret language by analyzing patterns and probabilities. They infer context from the words you use, not from your tone, history, or emotions.

If you say “cold” right after talking about the weather, it assumes you mean temperature. If you say it after describing someone who ignored you, it might miss the nuance. AI doesn’t learn from lived experience the way people do.

This is why responses can feel off or robotic. To achieve better results, clearly spell out the situation. AI performs best when guided with specific details and clear direction.

#9 AI Can Replace Emotional Intelligence

It can imitate caring words, but it doesn’t feel or relate to humans in the same way. Emotional intelligence requires self-awareness, empathy, and the ability to read unspoken cues. AI doesn’t have any of that.

AI might respond with phrases like “That must be difficult,” but it doesn’t understand difficulty. It’s repeating patterns based on language, not emotional truth. In settings such as therapy, leadership, or caregiving, that absence can make a significant difference.

You can use AI to script messages or role-play tough conversations, but don’t rely on it to guide real emotional decisions. People still do that better.

#10 It Has No Bias

Bias exists in its training data, just like it does across the internet. AI learns from massive amounts of content: books, websites, forums, and articles, all created by people with opinions, worldviews, and cultural influences.

This means bias isn’t just possible, it’s inevitable. Some perspectives are overrepresented, while others are left out. That affects how AI answers sensitive questions about race, politics, gender, or history.

Prompts shape results, too. You might get different answers by simply rewording a question. To use AI more responsibly, be aware of its training roots, use diverse input styles, and cross-check answers from multiple viewpoints.

#11 It Gets Humor

Humor relies on timing, context, and tone, all things AI doesn’t fully grasp. While it can mimic joke structures, it often misses nuance or misreads sarcasm. ChatGPT might reproduce a pun or deliver a dad joke, but it can also take something completely seriously when it’s clearly meant to be funny.

Humor is tied to culture, mood, and lived experience. AI lacks all three. It might catch a pun like “Why did the scarecrow win an award? Because he was outstanding in his field,” but stumble on wordplay that relies on tone or double meaning.

You can guide it to follow joke formats, especially when you give it examples. But if you’re expecting wit or original comedic timing, you’re better off writing your own punchlines.

It once confused a knock-knock joke for an actual security issue.

Performance and Reliability

This section covers the practical side: how well it remembers, responds, and reasons.

#12 It Remembers Everything

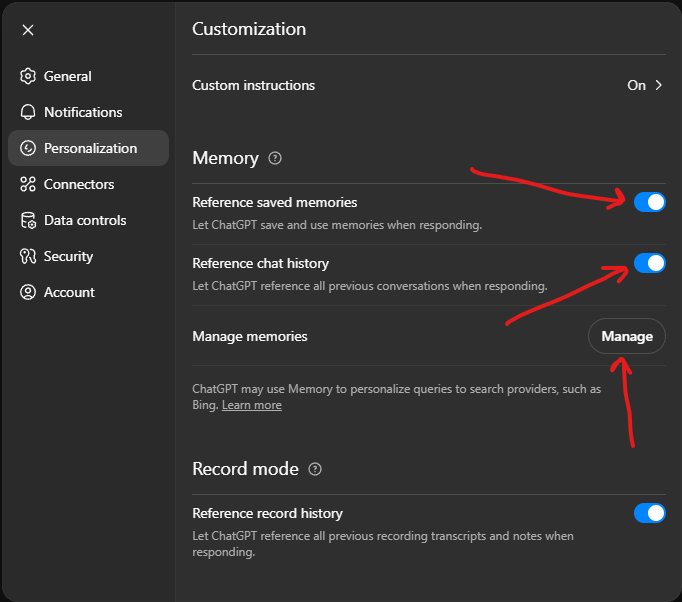

AI memory doesn’t work like human memory. Depending on the model and plan, tools like ChatGPT can work with up to about 100,000 tokens in a single session, which translates to approximately 75,000 words. But once that session ends, it forgets everything unless memory is enabled.

Even with memory turned on, the AI doesn’t recall your entire conversation history word for word. It keeps a limited set of details you’ve shared, like your name, preferences, or goals, and you can edit or delete those at any time.

This limitation protects your privacy, but it also means you need to reintroduce context in each session. For ongoing projects, saved notes or copied prompts may be more effective.
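The 75,000-word figure comes from a common rule of thumb: one token is roughly three-quarters of an English word. Here is a small Python sketch of that estimate. The 0.75 ratio is an approximation we’re assuming for illustration; real counts depend on the model’s tokenizer and the language you write in.

```python
# Rule of thumb (an assumed approximation, not an exact conversion):
# one token is roughly three-quarters of an English word.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words fit in a given token budget."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Estimate how many tokens a given number of English words uses."""
    return round(words / WORDS_PER_TOKEN)


print(tokens_to_words(100_000))  # 75000
print(words_to_tokens(1_500))    # 2000
```

By this estimate, a 1,500-word blog post uses about 2,000 tokens, which gives you a rough sense of how much pasted content a single session can hold.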

#13 It Never Makes Stuff Up

Sometimes it does. AI models like ChatGPT are trained to predict the next word, not to confirm facts. When there’s missing or ambiguous information, it may generate what sounds like a reasonable answer even if it’s entirely made up.

This phenomenon is called a hallucination. It can include incorrect statistics, invented quotes, or even fake academic studies. The output often sounds confident, which makes errors harder to detect.
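To see why a confident answer isn’t necessarily a correct one, here is a deliberately tiny Python toy built on three made-up sentences. It is not how ChatGPT is implemented (real models are vastly larger and weigh far more context), but it shows the same basic move: keep adding a likely next word, with no step that checks whether the result is true.

```python
from collections import Counter, defaultdict

# A made-up, three-sentence "training set" (hypothetical example data).
corpus = [
    "lincoln gave a famous speech",
    "lincoln gave a speech at gettysburg",
    "edison gave a demo of the light bulb",
]

# Count which word follows each word (a simple bigram model).
next_words = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_words[current][following] += 1


def continue_text(start: str, length: int = 6) -> str:
    """Repeatedly append the most common next word. Nothing checks facts."""
    words = [start]
    for _ in range(length):
        options = next_words.get(words[-1])
        if not options:
            break  # no known continuation for this word
        # Ties go to the word seen first in the training text.
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


print(continue_text("edison"))  # edison gave a famous speech at gettysburg
```

Every word pair in that output appears in the training text, yet the full sentence is something the data never said. Real hallucinations are far more sophisticated, but they come from a similar gap between sounding plausible and being verified.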

To avoid misinformation, always treat AI-generated content as a draft. Double-check facts, dates, and sources before sharing or acting on any advice. Think of AI as a brainstorming partner, not a final editor.

#14 It Predicts the Future

AI can process patterns and past data, but it doesn’t actually know what’s ahead. It isn’t connected to the future any more than a spreadsheet is. What it does well is spot trends, echo predictions made by experts, and create logical scenarios based on existing information.

For example, you can ask an AI what might happen to small business marketing in five years. It will offer ideas based on current tools, user behavior, and economic commentary. But it’s not reading tea leaves. It’s remixing what’s already known.

If you need strategy, ask for a range of possibilities instead of a definitive answer. The best future-planning still comes from people who understand context and consequences.

#15 AI Works the Same for Everyone

It doesn’t. AI tools respond based on how you ask, not who you are. If two people phrase the same question differently, they’ll likely get different results even if they mean the same thing.

Word choice, detail, and context shape the output. A casual tone may yield brief answers, while a technical one might prompt more complex responses. Even asking in different languages or from different cultural viewpoints can shift the outcome.

This is why prompt engineering has evolved into its own distinct skill. Understanding how to ask effectively is the key to getting what you need from AI.

#16 More Data Means Better Answers

Not always. Many assume that more input equals better output, but that’s not how AI works. If your prompt is too long, overly detailed, or ambiguous, the model may struggle to figure out what matters most.

AI performs best when given clear, structured prompts. One or two concise sentences with specific goals usually yield more accurate and helpful responses than lengthy paragraphs crammed with context. When in doubt, simplify and prioritize your request.

You can always build complexity in layers. Start with a short question. Then add detail once you see how the AI responds. This back-and-forth approach tends to produce better results than front-loading everything at once.

One of the earliest AI hallucinations? A chatbot confidently said Abraham Lincoln drove a Tesla. Yes, really.

Human Value and Responsibility

AI can’t replace what makes us human. These myths explain where the human edge still matters most.

#17 It Replaces Experts

AI can assist, not replace. It lacks lived experience, intuition, and the ability to interpret gray areas. While it can summarize information, suggest next steps, or outline a solution, it won’t understand exceptions, client context, or emotional nuance.

In fields like law, medicine, education, or creative strategy, professionals apply judgment built over years. AI can support these tasks, but it doesn’t make final calls. For example, it might generate a basic contract, but a lawyer needs to tailor it to jurisdiction, risk, and intent.

ChatGPT and similar tools work best when paired with human expertise. They enhance efficiency but don’t carry responsibility. The expert remains essential.

#18 It Can’t Be Flirty

It can mimic flirtation to a degree, but those responses are heavily filtered. AI is programmed with guardrails to avoid crossing boundaries or enabling inappropriate behavior.

Some users have attempted to form emotional or romantic relationships with AI bots. This is becoming a concern for mental health professionals, especially when people begin substituting human connection with AI interaction.

The truth is: AI doesn’t feel. Flirty responses are pattern-matched phrases based on language, not emotion. They’re designed to keep users engaged, not form attachments.

Use AI to write clever text messages, maybe. But remember it’s not a person. It’s software designed to simulate conversation, not return affection.

#19 It Can’t Harm You

Misuse can lead to real problems. If you trust AI answers blindly or treat them as fully accurate, you can end up spreading false information. This happens more often than most users realize.

Misinformation spirals begin when people use AI-generated facts in social posts, documents, or decisions without verifying their accuracy. If one wrong answer gets repeated or cited, it gains false credibility.

There’s also emotional harm. Some users become overly dependent on AI for reassurance or decision-making, which can cause anxiety, especially when the model provides inconsistent or vague responses.

AI is a powerful tool, but it’s not a replacement for human thought, research, or empathy. Think of it as a helpful draft assistant, not a source of absolute truth.

#20 It Makes You Lazy

This one depends entirely on how you use it. AI can either make you passive or help you be more productive. When used intentionally, it saves time on routine tasks such as writing drafts, answering common questions, or formatting content, so you can focus on the more meaningful aspects of your work.

But like any tool, if you let it do all the thinking, your skills can stagnate. It’s not a substitute for learning or judgment. Treat it as a shortcut for repetitive work, not as a creative replacement.

The smartest users leverage AI to move faster, not avoid thinking. It’s the difference between using a calculator and understanding math.

Final Thoughts

AI isn’t replacing us. It’s a tool. Useful, fast, and helpful when used right. It can’t replicate experience, judgment, or emotion. At Premium Websites, Inc., we guide business owners in using tools like ChatGPT wisely, not fearfully.

FAQ

Can AI write all my blog posts?

It can help draft them, but human editing is still needed to align with your voice and brand.

Is the paid plan worth it?

If you’re writing or researching regularly, yes. You’ll get more advanced tools and faster responses.

Can I use AI with my WordPress site?

Yes. It’s great for generating outlines, revising drafts, or brainstorming content.

Will AI make web designers obsolete?

Not at all. It can’t replace human insight, visual skills, or client relationships. It’s a supplement, not a substitute.

What’s the best way to learn AI?

Use it like you would a curious intern. Ask questions. Give it feedback. Test different prompts.

Curious how AI fits into your website and SEO strategy? Let’s talk. Premium Websites, Inc. supports business owners who want clarity, not chaos.

The post 20 AI Myths That Deserve a Reality Check appeared first on Premium Websites, Inc.
